Forward Snyk Vulnerability data to Splunk Observability Cloud

TL;DR

Use a Prometheus exporter to send all your application security vulnerabilities from Snyk to Splunk Observability Cloud.

Here are all the necessary links to get started:

- Snyk Exporter: https://github.com/lunarway/snyk_exporter

- Splunk OpenTelemetry Collector for Kubernetes: https://docs.splunk.com/Observability/gdi/opentelemetry/install-k8s.html#otel-install-k8s

Update (2022-09-22)

The option I describe here is just one way to achieve this. There may be a more straightforward option, which I describe in a more recent post: Snyk Integration Capabilities with WebHooks - some examples.

And please get in touch to let me know what you think. Any feedback is more than welcome.

The Basics

In a recent post, I shared how to send Snyk vulnerability data to New Relic using Prometheus. Due to popular demand, I wanted to continue this series with details on how to achieve the same result with the Splunk Observability Cloud platform.

How to get started?

Snyk Exporter is an open-source tool that scrapes the Snyk HTTP API and exposes the scan results as Prometheus metrics. It can easily be deployed into a Kubernetes cluster, which is the scenario this article describes, but it can also run standalone outside of Kubernetes.
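Before wiring anything into Splunk, it can help to look at what the exporter actually exposes. The sketch below is a small shell function that sums one vulnerability metric per severity from Prometheus text output. It assumes the metric name `snyk_vulnerabilities_total` with a `severity` label and the default listen port 9532, both taken from the exporter's README at the time of writing, so check them against the version you deploy. The function name is hypothetical.

```shell
# Hypothetical helper: sum the (assumed) snyk_vulnerabilities_total samples
# per severity label, reading Prometheus text exposition format on stdin.
# Output: one "<severity> <count>" line per severity, sorted by name.
snyk_severity_summary() {
  awk '
    # Only sample lines of the metric; "# HELP" and "# TYPE" lines are skipped.
    /^snyk_vulnerabilities_total[{]/ {
      # Extract the value of the severity label (first or later in the label set).
      if (match($0, /[{,]severity="[^"]*"/)) {
        sev = substr($0, RSTART, RLENGTH)
        sub(/^[{,]severity="/, "", sev)
        sub(/"$/, "", sev)
        # The sample value follows the closing brace; an optional timestamp may follow it.
        val = $0
        sub(/^.*[}][ \t]*/, "", val)
        split(val, parts, " ")
        total[sev] += parts[1]
      }
    }
    END { for (s in total) printf "%s %d\n", s, total[s] }
  ' | sort
}
```

For example, with the exporter port-forwarded locally (`kubectl port-forward deployment/<exporter-deployment> 9532:9532`, where the deployment name is whatever examples/deployment.yaml uses), run `curl -s http://localhost:9532/metrics | snyk_severity_summary`.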

This article focuses on Kubernetes, primarily because I want to use Splunk’s OpenTelemetry Collector for Kubernetes to scrape the Prometheus endpoints and send the data to Splunk Observability Cloud, so that you can store and visualize crucial metrics on one platform.

Follow these deployment steps:

1. Deploy Snyk exporter

The Snyk exporter repository already contains example deployment YAML files that you can adjust (secrets.yaml holds the API token for your Snyk account) and deploy using:

kubectl apply -f examples/secrets.yaml

kubectl apply -f examples/deployment.yaml
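If you would rather not keep the Snyk token in an edited file, you can generate an equivalent Secret manifest from a token held in an environment variable. The sketch below uses the Kubernetes `stringData` field, so no manual base64 encoding is needed. The function name is hypothetical, and the secret name and key are parameters because they must match the ones defined in examples/secrets.yaml, which examples/deployment.yaml references.

```shell
# Hypothetical helper: print a Kubernetes Secret manifest holding the Snyk API token.
# Arguments: <secret-name> <key> <token>. Use the secret name and key from
# examples/secrets.yaml so that examples/deployment.yaml still finds the token.
# Assumes the token contains no double quotes or backslashes.
snyk_secret_manifest() {
  if [ "$#" -ne 3 ]; then
    echo "usage: snyk_secret_manifest <secret-name> <key> <token>" >&2
    return 1
  fi
  # stringData accepts plain-text values; Kubernetes stores them base64-encoded.
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: $1
type: Opaque
stringData:
  $2: "$3"
EOF
}
```

Usage, in place of applying examples/secrets.yaml: `snyk_secret_manifest <secret-name> <key> "$SNYK_TOKEN" | kubectl apply -f -`.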

2. Deploy Splunk’s OpenTelemetry Collector integration

You can use Splunk’s guided setup in the UI to configure a custom install and deploy script for the Kubernetes integration. To get there, open the Data Management section of the navigation menu, click +Add Integration, and select Kubernetes. Enter the relevant information in the UI and follow each step. In step 3 (Install Integration), add one extra configuration parameter to the Helm install command shown in step D so that Prometheus metrics from your Kubernetes deployments are auto-detected:

--set autodetect.prometheus=true
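As I understand the chart, `autodetect.prometheus=true` makes the collector scrape pods that carry the common `prometheus.io/scrape`, `prometheus.io/port` and `prometheus.io/path` annotations. If the exporter's pod template does not already have them, a JSON merge patch like the one printed by the sketch below adds them. The helper name is hypothetical, and 9532 is assumed to be the exporter's default port (per its README at the time of writing); pass a different port if you changed the listen address.

```shell
# Hypothetical helper: print a JSON merge patch that adds the standard
# Prometheus scrape annotations to a Deployment's pod template.
# Optional arguments: [port] [path]; the defaults are assumptions.
prometheus_scrape_patch() {
  port="${1:-9532}"      # assumed default listen port of snyk_exporter
  path="${2:-/metrics}"  # conventional Prometheus metrics path
  # Annotation values must be strings, so the port is quoted as well.
  printf '{"spec":{"template":{"metadata":{"annotations":{"prometheus.io/scrape":"true","prometheus.io/port":"%s","prometheus.io/path":"%s"}}}}}\n' \
    "$port" "$path"
}
```

Usage: `kubectl patch deployment <exporter-deployment> --type merge -p "$(prometheus_scrape_patch)"`. Changing the pod template triggers a new rollout of the exporter.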

The final command to install the Helm chart should look something like this (note that my setup uses an AKS cluster running on Azure):

helm install --set cloudProvider='azure' --set distribution='aks' --set splunkObservability.accessToken='SPLUNK_ACCESS_TOKEN' --set clusterName='Snyk-AKS' --set splunkObservability.realm='us1' --set gateway.enabled='false' --generate-name splunk-otel-collector-chart/splunk-otel-collector --set splunkObservability.profilingEnabled='true' --set autodetect.prometheus=true

Make sure to replace SPLUNK_ACCESS_TOKEN with your own Splunk access token.

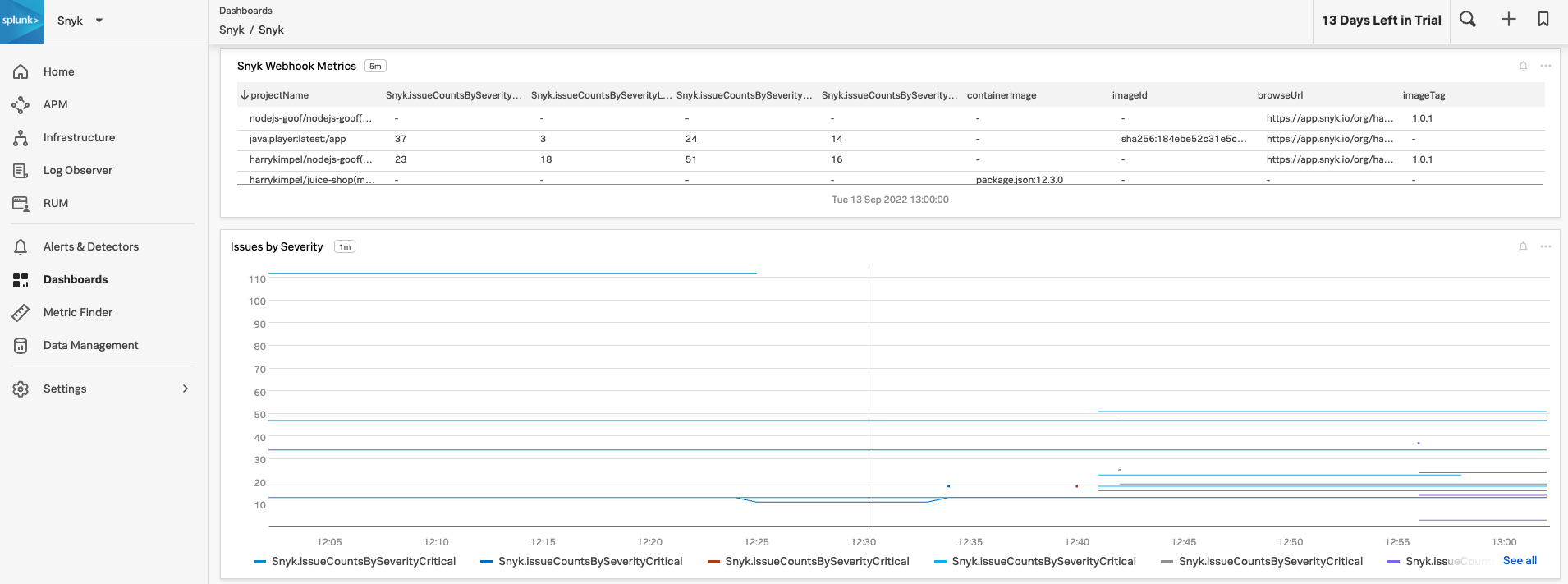
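If you prefer not to pass the access token on the command line, where it ends up in your shell history, the same settings can go into a Helm values file. The sketch below writes one from a `SPLUNK_ACCESS_TOKEN` environment variable. The function and variable names are my own, and the values simply mirror the `--set` flags of the install command above, so adjust cluster name, realm and cloud provider to your environment.

```shell
# Hypothetical helper: write a Helm values file that mirrors the --set flags
# of the install command above. Argument: output file path.
# Reads the access token from the SPLUNK_ACCESS_TOKEN variable.
write_otel_values() {
  if [ -z "${SPLUNK_ACCESS_TOKEN:-}" ]; then
    echo "SPLUNK_ACCESS_TOKEN is not set" >&2
    return 1
  fi
  cat > "$1" <<EOF
cloudProvider: azure
distribution: aks
clusterName: Snyk-AKS
splunkObservability:
  realm: us1
  accessToken: "${SPLUNK_ACCESS_TOKEN}"
  profilingEnabled: true
gateway:
  enabled: false
autodetect:
  prometheus: true
EOF
}
```

Usage: `write_otel_values values.yaml && helm install --generate-name -f values.yaml splunk-otel-collector-chart/splunk-otel-collector`. The file contains your token, so keep it out of version control.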

3. Check out the dashboard

Once the Helm chart is deployed and ready, you can create a dashboard in Splunk Observability Cloud that visualizes all the metrics coming from the Snyk exporter.

A sample JSON representation of this dashboard can be found here: Splunk Sample Dashboard (JSON).

Related Links/References

- Snyk Exporter: https://github.com/lunarway/snyk_exporter

- Splunk OpenTelemetry Collector for Kubernetes: https://docs.splunk.com/Observability/gdi/opentelemetry/install-k8s.html#otel-install-k8s

- Splunk Sample Dashboard (JSON)
